Snowflake Data Warehouse

JVM AGENT

Item Type Support

SIGNALS SCALARS CONDITION

Overview

The SQL connector enables Seeq to access data from Snowflake.

Prerequisites

You will need some information to configure a connection to your Snowflake Data Warehouse.

Determine the Connectivity Requirements

Prior to determining where to make the connection from, you will need to identify how you can connect to Snowflake.

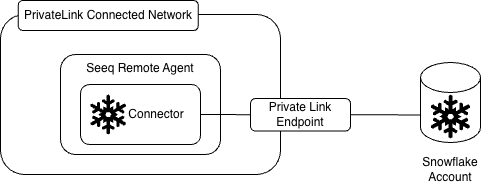

Are you leveraging PrivateLink Connectivity?

If you are leveraging PrivateLink you will want to determine which part of the network has access to that PrivateLink endpoint. You will need to deploy a remote agent in that network segment and make the connection from there.

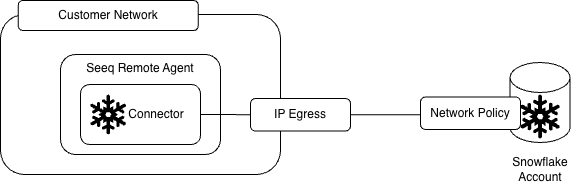

Do you have a network policy implemented in Snowflake?

If you have a network policy in place, you will want to determine where you should deploy your remote agent so that it’s external IP matches IP’s allowed within the network policy.

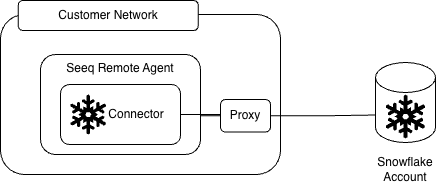

Are you leveraging a proxy between the remote agent and Snowflake?

You will need to leverage the JDBC properties configuration item below to set the Proxy information.

"JdbcProperties":{

"nonProxyHosts":"<snowflake address>",

"proxyHost":"<proxy host>",

"proxyPort":<proxy port>,

"useProxy":"true"

}Account

Your account is the subdomain of your base URL. For example, if you access https://my-company.snowflakecomputing.com/, your account would be my-company.

Warehouse

Seeq will need an existing warehouse to perform any queries.

User Account

Seeq needs an account to make queries. This account should have the Timezone set to UTC to avoid having to set this parameter per session. This can be achieved with the following command:

ALTER USER SET TIMEZONE = 'UTC';Further information can be found here: https://docs.snowflake.com/en/sql-reference/parameters#timezone.

Authentication

There are three authentication mechanisms supported by the Snowflake Database type - Username / Password, key pair, and External OAuth. External OAuth is supported when a security integration with the External OAuth type has been created. To use username/password authentication, leave the OAuth-specific fields set to null (ClientId, ClientSecret, TokenUrl) and set username and password values accordingly. To use OAuth leave the password field null. To use key pair authentication, you will need to have an RSA key pair in PEM format. This public key will have to be associated with a user in Snowflake. The private key will have to be stored on the agent where connectivity is being made from. You will enter the username and leave the password field as null and leave the OAuth-specific fields set to null (ClientId, ClientSecret, TokenUrl).

Setting up Key Pair Authentication

Ensure you have a key pair created for your user. The public key will have to be registered to the user in Snowflake by executing (as a Security Admin):

CODEalter user <USERNAME> set rsa_public_key='<contents of rsa_key.pub without headers>';The private key needs to be stored on the remote agent. We suggest using the

${SEEQ_DATA}\keysfolder to store your private key.In the configuration you will enter the following JDBC Property:

CODE"JdbcProperties": { "private_key_file": "${SEEQ_DATA}\\keys\\rsa_key.pem" }

Setting up External OAuth

Confirm that the Snowflake security integration has been properly created in your account

The first step to enable External OAuth is to ensure the security integration has been configured in your Snowflake account. You will want to ensure the EXTERNAL_OAUTH_TOKEN_USER_MAPPING_CLAIM is set to the token claim that contains the User Name of the user created within Snowflake. If no claim matches the user name you will need to specify EXTERNAL_OAUTH_SNOWFLAKE_USER_MAPPING_ATTRIBUTE so that the token can be associated with the correct user record in Snowflake. Note the User Name as this will be the user name that is used to log into Snowflake.

Ensure that your Identity Provider (Okta, Ping, AzureAD, Custom) supports the client credentials grant

Because Seeq leverages a service account to access data from Snowflake, the identity provider will have to support the client credentials grant type for the token request. This is the standard for machine-to-machine communication.

Confirm the Scope that was used to setup the Security Integration on the Identity Provider

The scope will have been set on the Identity Provider. The scope will begin with the prefix session:role. This will need to be recorded and configured in the Scope configuration parameter described below.

Retrieve the token URL from your Identity Provider’s Metadata URI endpoint

This will be the TokenUrl configuration parameter required for the configuration detailed below.

Create an application within your Identity Provider and note the ClientID and ClientSecret

These values will be used in the ClientIdand ClientSecret fields respectively.

Test the application credentials

The application credentials can be tested with a cURL request to your token URI:

curl -X POST -H 'Content-Type: application/x-www-form-urlencoded;charset=UTF-8' \

-d 'client_id=<ClientId>' \

-d 'grant_type=client_credentials' \

-d 'client_secret=<ClientSecret>' \

-d 'scope=<Scope>' \

<TokenUrl>This should return a token in a JSON Payload that looks like the following:

{

"token_type": "Bearer",

"expires_in": 3600,

"access_token": "<token>",

"scope": "<scope>"

}The token can be tested in a Snowflake workbook with the command:

select system$verify_external_oauth_token('<token>');The result should be a message reading Token Validation finished with a payload of:

{

"Validation Result": "Passed",

"Issuer": "<token issuer>",

"Extracted User claim(s) from token": "<Username>"

}You will want to ensure that the value for Extracted User claim(s) from token matches the Username that is created in Snowflake (or the attribute that it is mapped to). If you did not get a Validation Result of Passed, then you will want to validate your configuration.

Configuration

This is an example configuration template that is displayed in the Additional Configuration box that appears when you click Configure for an existing datasource (or if a new datasource is being created, in the Create new datasource connection modal that appears after clicking Add Datasource and choosing the Snowflake template) on the Datasources administration page.

{

"QueryDefinitions": null,

"Type": "SNOWFLAKE",

"Hostname": null,

"Port": 0,

"DatabaseName": null,

"Username": "",

"Password": null,

"InitialSql": null,

"TimeZone": "UTC",

"PrintRows": false,

"UseWindowsAuth": false,

"SqlFetchBatchSize": 100000,

"JdbcConnectionStringOverride": null,

"Account": "",

"Warehouse": "",

"ExpandQueryRange": true,

"AlterSnowflakeSessionTimezone": true,

"ShouldMonitor": false,

"JdbcProperties": null,

"TokenUrl": "",

"ClientId": "",

"ClientSecret": "",

"Scope": ""

}Standard SQL Additional Configuration

Property Name | Default Value | Data Type | Description |

|---|---|---|---|

| null | Array[QueryDefinition] | A list of queries that Seeq will use to generate signals, conditions and scalars. See SQL Query Definition Excerpt for details. |

| null | String | The hostname of your datasource. If your hostname is of the form |

| 1,000,000 | Integer | The maximum number of signals that can be indexed from a single query definition. This value is here to protect against incorrect query definitions producing many millions of invalid signals. |

| 0 | Integer | The port for the JDBC Connection. |

| null | String | Optional: Can be defined here or as part of a fully qualified table name in the QueryDefinition. |

| “" | String | The user name |

| null | String/SecretFile | The user password. |

| null | String | Optional: Can be specified if you have a known, functioning JDBC connection string. If specified, |

| null | String | Optional: A SQL command that would be run one upon establishing a connection. |

| null | String | Optional: The time zone to use for timestamp or datetime columns. For example, to set this to US Pacific Time, you would use |

| false | Boolean | The rows from the SQL query will be printed to the jvm-link log. This is for debugging purposes only, and should be set to |

| false | Boolean | Note: This is not available for all Database Types. If you are using a database type that supports Windows Authenication, you will need to ensure that the remote agent is running as the correct user. |

| null | Map<String, String> | A map of key value pairs that are configured as connection properties |

| null | Integer - {0,1} | Specifies the minimum number of idle connections to maintain in the connection pool. If not explicitly specified, it defaults to a database-specific value, typically 1. For Databricks, it defaults to 0 to prevent the cluster from being kept running but it can be changed to 1 if needed. |

Time Zone

Some SQL date and/or time column types have no zone information. The TimeZone field is available to specify the time zone that Seeq should use for data coming from columns types that have no time zone information of their own. UTC offsets (+01:00, -10:30, etc.) and IANA regions (America/Los_Angeles) are accepted. If no time zone is specified, Seeq defaults to the local region of the Seeq server. If your data was stored in UTC time, set this field to "UTC" or "+00:00". If your data was entered using a "wall clock", set this to the IANA time region of the "wall clock". Note that offsets are constant throughout the year whereas a region may observe daylight savings time. If you used a wall clock in a location that observes daylight savings time, a region is a better choice than an offset for this field. A list of IANA regions (tz database time zones) can be found here.

Snowflake-Specific SQL Additional Configuration

Property Name | Default Value | Data Type | Description |

|---|---|---|---|

|

| String (required) | The account name to be used for the connection. Null can be entered and the connector will infer the account name from the host name. If the incorrect account name is entered, you will see a |

|

| String (required) | The warehouse to use for the query. This is required if there is no default warehouse for the account. |

| true | Boolean | If true, the query range will be expanded to include the maximum interpolation range for a signal. This will avoid costly LIMIT 1 queries. Only set to false if you have a large maximum interpolation range and high frequency data. |

| false | Boolean | Only If true, the connector will attempt to alter the time zone session parameter to UTC. Ideally, this parameter should be set as per the perquisites section above. |

| false | Boolean | Will turn off connection monitoring which causes a request once every 5 seconds. This request does not use warehouse time, but does count against your cloud services credits. |

| Empty | String | The URL of the token endpoint from your identity provider. |

| Empty | String | The ClientId of the service principal created in your identity provider |

| Empty | String/SecretFile | The ClientSecret of the service principal created in your identity provider |

| Empty | String | The scope configured in the Identity Provider and Snowflake. |

Setting the Role

The Snowflake role can be set by passing in a JDBC Property called role to the JdbcProperties configuration property shown above:

"JdbcProperties": {

"role": "my_role"

}Known Issues

Using the cast operator is not supported. Instead an explicit cast will need to be made. For example, instead of this:

SELECT '2022-04-01'::DATE;You will need to use this:

SELECT CAST('2022-04-01' AS DATE);

--OR:

SELECT TO_DATE('2022-04-01');Troubleshooting

net.snowflake.client.jdbc.SnowflakeSQLException: Fail : Network policy is required

This error originates from Snowflake’s network-policy enforcement rules.

When a Snowflake account or user is configured as a service user (TYPE = 'SERVICE'), Snowflake requires an explicit network policy to be attached before any programmatic (non-interactive) login can occur. If no network policy is assigned and a Programmatic Access Token (PAT) or other automated credential is in use, Snowflake will block the connection and return:

Option 1 – Add a network policy (recommended)

Assign a simple policy to the service user that specifies the public IP or PrivateLink endpoint of the Seeq Remote Agent.

CREATE OR REPLACE NETWORK POLICY SEEQ_RA_POLICY

ALLOWED_IP_LIST = ('<agent_public_ip>');

ALTER USER <RA_USER> SET NETWORK_POLICY = SEEQ_RA_POLICY;

Then, if needed, convert the user to a service user:

ALTER USER <RA_USER> SET TYPE = 'SERVICE';Other Potential Errors

A couple of errors that you may encounter are:

String-valued samples are prohibited in numeric-valued signal

If the y-axis value of the signal is a string, then the Value Unit Of Measure property is required and must be set to "string". See Example 2.

Since the Value Unit Of Measure is different for string and numeric signals, it may be easiest to write one query definition for the numeric signals and write another for the string signals. Alternatively, the Value Unit Of Measure property could be set according to an SQL IF statement similar to the technique used in Example 13.

Samples must be ordered by their keys

If this is occurring when trending near the daylight savings transition, this is an indication that the TimeZone is not configured properly. For example, if TimeZone is set to "America/Los_Angeles", this means that the timestamp data in the SQL table was recorded using "America/Los_Angeles" time (Pacific) which observes daylight savings. During the spring daylight savings transition, time skips from 01:59:59.9 to 03:00:00.0 which means that the 02:00 hour doesn't exist and therefore there should be no data in the SQL table during that 02:00 hour. Any data in the 02:00 hour is interpreted as being in the 03:00 hour. If there is also data in the 03:00 hour, the samples will be out of order.

Original data: | 01:15, 01:45, 02:15, 02:45, 03:15 |

After accounting for non-existent 02:00 hour: | 01:15, 01:45, 03:15, 03:45, 03:15 |

If data exists in the 02:00 hour, it must mean it was either recorded in error or was recorded in a time zone that doesn't observe daylight savings such as UTC or a constant offset from UTC.

For more information, see the TimeZone field in the Configuration section above.

Warning when Indexing with “TestQueriesDuringSync” enabled:

<Query Definition SQL> will fail to retrieve samples. Please correct the Query Definition. Error: net.sf.jsqlparser.JSQLParserException: Couldn't parse SQL statement <Query Definition SQL>

Error when retrieving data for SQL Connector Signals and Conditions:

Error: net.sf.jsqlparser.JSQLParserException: Couldn't parse SQL statement <Query Definition SQL>

The warning and error above may have a common cause. These can occur when the SQL statements in a “QueryDefiniton” do not properly wrap identifiers that share a value with JSql Parser Restricted Keywords in double quotation marks. Refer to the Connector Rules > General section of Creating a Query Definition for the SQL Connector for details about this restriction and how to accommodate it in your queries.

net.snowflake.client.jdbc.SnowflakeSQLException: Bad request; operation not supported.

Likely your Account value in your configuration is incorrect. As a first step, set this value to null and allow the JDBC driver to infer the account name from the hostname.

If you are running into issues with connecting to or access data from Snowflake, view our guide for troubleshooting datasource issues.

Performance considerations

When using filter criteria in a where clause, you will want to ensure you have properly set up your clustering keys.

Evaluating execution times can be helpful when trying to determine the cause of a slow query.

Ensure you are using timestamp types for your time columns vs having your timestamps as strings or the results of concatenations.

Querying data from a table vs a stage will generally be much more performant.

View our guide on optimizing datasource performance for general guidance.