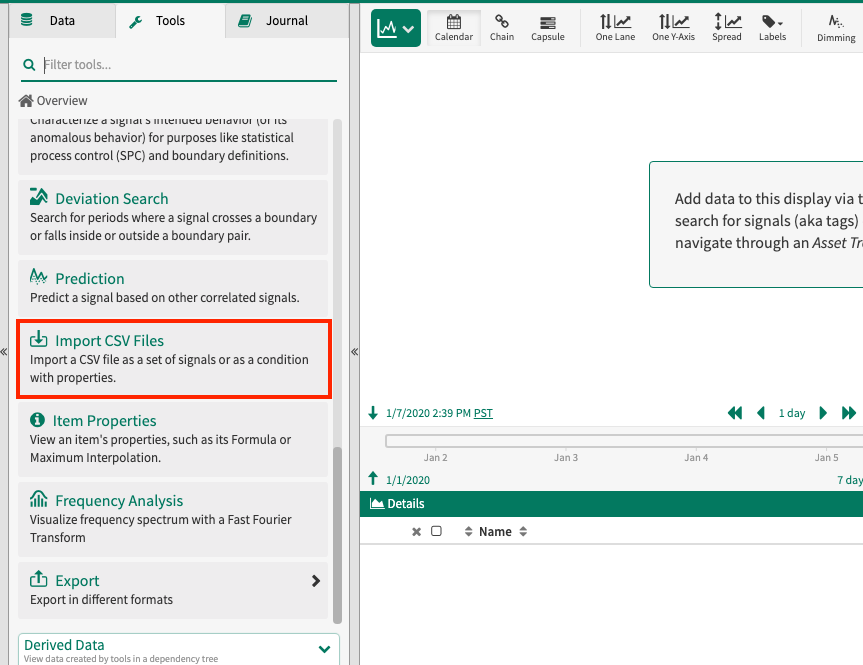

Import CSV Files

Overview

There are often cases where you want a quick way to view time-series data from a text file. Seeq can read Comma Separated Value (CSV) files, a widely used standard for text-based data files.

For example, perhaps you want to view lab sample results that are stored in a text file on trends next to your other process data from a historian. This article provides step-by-step instructions for getting your CSV data into Seeq for further analysis.

Note: Seeq does not keep a copy of the CSV file provided. We recommend that users keep copies of their files for future reference.

Datafile

A CSV import creates a Datafile, which contains the signals or condition that result from importing that CSV file.

Import Requirements

CSV Import has the following limits. If your files are larger, split them up or ask your Seeq admin to increase the CSV limits, which are described in Configuring Seeq. The limits are in place to keep large files from swamping the system. Seeq does not test files larger than these limits, so increase them at your own risk.

500 MB

1000 columns

1.1 million rows
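If a file exceeds the row limit, one way to split it is sketched below. It assumes the header is the first line, each following line is one data row (consistent with quotes not being special, so no value spans lines), and the file is UTF-8. `split_csv` and the `_partN` naming are hypothetical choices, not part of Seeq, and the sketch only enforces the row limit, so check the 500 MB and 1000-column limits separately.

```python
from pathlib import Path


def split_csv(source, max_rows=1_100_000):
    """Hypothetical helper: split a CSV into parts of at most max_rows data rows.

    Assumes the header is the first line and every following line is one data
    row. The header is repeated in each part. Returns the paths written.
    """
    source = Path(source)
    parts, out, count = [], None, 0
    # newline="" preserves the original line endings when copying lines.
    with source.open(encoding="utf-8", newline="") as f:
        header = f.readline()
        for line in f:
            if out is None or count == max_rows:
                if out is not None:
                    out.close()
                path = source.with_name(f"{source.stem}_part{len(parts) + 1}{source.suffix}")
                out = path.open("w", encoding="utf-8", newline="")
                out.write(header)  # every part needs its own header row
                parts.append(path)
                count = 0
            out.write(line)
            count += 1
    if out is not None:
        out.close()
    return parts
```

Each part can then be imported into the same Datafile using Append.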

A file can be used for signal(s) import or condition import but not both at the same time.

Signals:

One key column per file per import. If the file has more than one possible key column, import the file again and choose a different key column.

Many signals can be imported at once. Each column of the file can be mapped to a signal.

Within a signal, there can be only one sample per key. If there is more than one sample for a key, the most recently uploaded sample replaces any sample uploaded earlier.

Conditions:

One condition per import (in other words, one pair of start and end columns per import). If you'd like to create multiple conditions from one file, import the file again and choose different columns.

Within a condition, there can be only one capsule with a given combination of start time, end time, and properties. There can, however, be more than one capsule with identical start and end times if the properties differ between those capsules. Keep this in mind when appending capsules to an existing condition, because it determines whether capsules are overwritten or remain.
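The capsule identity rule can be modeled as a dictionary keyed by start, end, and properties together. This is a hypothetical sketch (`merge_capsules` is not a Seeq API) that assumes property values are hashable; it shows why two capsules with the same times but different properties both survive an append.

```python
def merge_capsules(existing, incoming):
    """Hypothetical model of capsule identity within one condition.

    Each capsule is (start, end, properties_dict). Start, end, and the full set
    of properties together form the identity, so a capsule only replaces
    another when all three match.
    """
    merged = {}
    for start, end, props in [*existing, *incoming]:
        key = (start, end, frozenset(props.items()))
        merged[key] = (start, end, props)  # on a match, the later capsule wins
    return list(merged.values())
```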

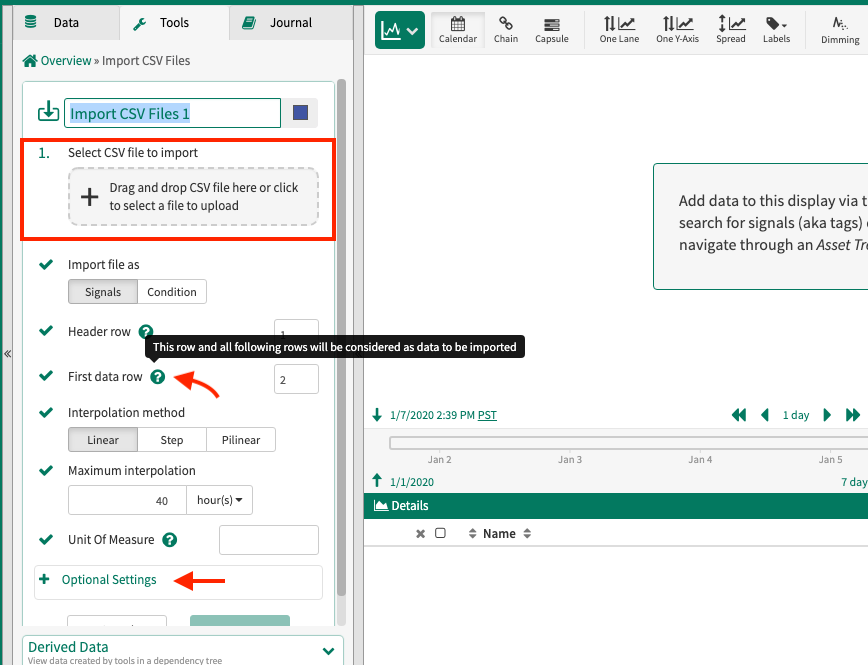

Header Row + Data Row Start

A header row with unique column names is required. The uniqueness test is case insensitive. For example, "Value" and "value" are not considered unique.

The header rows must come before the data rows.
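Before importing, a header can be checked for names that collide once case is ignored. This sketch uses Python's `str.casefold()` as an approximation of a case-insensitive comparison; the exact comparison Seeq performs is an assumption here, and `find_duplicate_headers` is a hypothetical helper.

```python
def find_duplicate_headers(header):
    """Return (first, duplicate) pairs of column names that differ only by case.

    casefold() approximates a case-insensitive comparison.
    """
    seen = {}
    duplicates = []
    for name in header:
        key = name.casefold()
        if key in seen:
            duplicates.append((seen[key], name))
        else:
            seen[key] = name
    return duplicates
```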

Quotes

Quotes are not interpreted as a special character. A CSV row of 2020-10-04T00:00Z, 1, 2, "1,234", 5 will be interpreted as these entries:

2020-10-04T00:00Z | 1 | 2 | "1 | 234" | 5
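The difference between this behavior and a quote-aware CSV parser can be seen with Python's standard `csv` module. Splitting on every comma reproduces the entries listed above (surrounding spaces are stripped here only for readability), while `csv.reader` keeps "1,234" together. Values that contain the delimiter therefore need cleaning, for example by removing thousands separators, before import.

```python
import csv

line = '2020-10-04T00:00Z, 1, 2, "1,234", 5'

# Quotes are ordinary characters for the import, so every comma starts a new entry.
entries = [entry.strip() for entry in line.split(",")]
print(entries)  # ['2020-10-04T00:00Z', '1', '2', '"1', '234"', '5']

# A quote-aware parser would instead keep "1,234" as one value.
quote_aware = next(csv.reader([line], skipinitialspace=True))
print(quote_aware)  # ['2020-10-04T00:00Z', '1', '2', '1,234', '5']
```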

Supported Delimiters

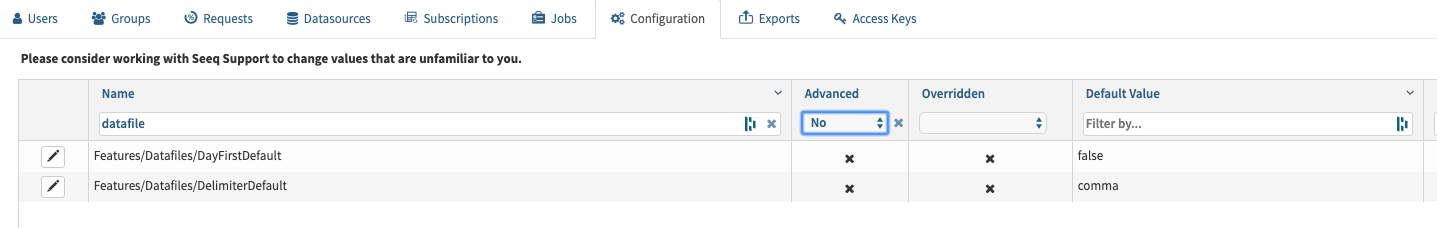

Comma, semicolon, and tab delimiters are supported. The factory default is comma; however, the default for each Seeq server can be configured. The import UI still lets you change the delimiter regardless of the default configuration.

Supported Decimal Formats

Both comma and period are supported as the decimal separator. If comma is used as the decimal separator, it cannot also be the delimiter, so be sure to change the delimiter import setting (for example, to semicolon or tab).
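A minimal sketch of reading a row that uses semicolon as the delimiter and comma as the decimal separator. `parse_row` is hypothetical; it treats the first entry as the key and assumes the numeric values contain no thousands separators.

```python
def parse_row(line, delimiter=";", decimal=","):
    """Hypothetical sketch: split one row and parse its numeric entries.

    The first entry is the key and is returned unchanged. Assumes numeric
    values contain no thousands separators.
    """
    if delimiter == decimal:
        raise ValueError("the decimal separator cannot also be the delimiter")
    key, *values = line.rstrip("\r\n").split(delimiter)
    return key, [float(value.replace(decimal, ".")) for value in values]


print(parse_row("2019-04-22 10:12;1,5;-0,25"))  # ('2019-04-22 10:12', [1.5, -0.25])
```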

Supported Timestamp Formats

The same format must be used for the entirety of the key column.

If the timestamp includes time zone information, it will be used.

If the timestamp does not include time zone information, the time zone specified in the import UI will be used. If not specified in the UI, the Seeq server time zone will be used.
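The time zone precedence above can be sketched with Python's `zoneinfo` module (Python 3.9+, which needs IANA time zone data on the system). `resolve_timestamp` is a hypothetical helper; it only handles ISO 8601 strings that `datetime.fromisoformat` accepts, and the `server_tz` default of `"UTC"` stands in for whatever time zone the Seeq server is actually configured with.

```python
from datetime import datetime
from zoneinfo import ZoneInfo


def resolve_timestamp(text, import_tz=None, server_tz="UTC"):
    """Hypothetical sketch of the time zone precedence.

    1. An offset in the timestamp itself is used as-is.
    2. Otherwise the time zone chosen in the import UI (import_tz) is applied.
    3. Otherwise the server time zone is applied (server_tz is a stand-in).
    """
    # fromisoformat() before Python 3.11 rejects a trailing "Z", so normalize it.
    if text.endswith("Z"):
        text = text[:-1] + "+00:00"
    ts = datetime.fromisoformat(text)
    if ts.tzinfo is None:
        ts = ts.replace(tzinfo=ZoneInfo(import_tz or server_tz))
    return ts
```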

Day-first dates are supported in addition to month-first dates. This creates the potential for ambiguous dates.

Ambiguous Dates

07/04/2019 could be July 4, 2019 or April 7, 2019.

If an unambiguous date such as 13/4/2019 or 4/13/2019 can be found in the first few lines of the file, Seeq automatically determines whether day-first or month-first dates were intended.

If Seeq cannot automatically determine day-first versus month-first, it falls back on the import setting dayFirstDefault. If true, the dates are interpreted as day first; if false, they are interpreted as month first. The factory default is dayFirstDefault = false; however, the default for each Seeq server can be configured. The import UI still lets you change this setting regardless of the default configuration.
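The day-first versus month-first decision can be approximated as follows. `infer_day_first` is a hypothetical sketch: it looks only at the first two numeric fields of dates such as `13/4/2019` or `1. 08. 2019`, skips lines that do not start with a 1 or 2 digit field (year-first and time-first keys), and falls back on a `day_first_default` flag that plays the role of dayFirstDefault. How many lines Seeq actually inspects is not stated, so the caller decides which lines to pass in.

```python
import re

# First two fields of a day/month date, separated by "/", "-", "." or ". ".
_DATE = re.compile(r"^(\d{1,2})[/.\-] ?(\d{1,2})[/.\-]")


def infer_day_first(lines, day_first_default=False):
    """Hypothetical sketch: True for day-first, False for month-first."""
    for text in lines:
        match = _DATE.match(text.strip())
        if not match:
            continue
        first, second = int(match.group(1)), int(match.group(2))
        if first > 12:   # e.g. 13/4/2019: the first field can only be a day
            return True
        if second > 12:  # e.g. 4/13/2019: the second field can only be a day
            return False
    return day_first_default  # only ambiguous dates such as 07/04/2019 were seen
```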

Supported Timestamps

Generally (unless stated otherwise)

Time not required

2 digit or 4 digit year supported

1 or 2 digit month, day, hour supported

Only 2 digit minutes and seconds supported

If providing time, hours and minutes are required but seconds are not required

Fractional seconds supported but not required. Nanoseconds are the smallest supported time unit.

ISO 8601:

date and time required

4 digit year, 2 digit month and day required

supported year month day separator: dash

supported date and time separators: T, space

Examples: ISO 8601

2016-01-08 22:52

2016-01-08T22:52:12Z

2016-01-08T22:52:12.123456789

2016-01-08 22:52:12.123456789Z

2016-01-08T22:52:12.123456789+00:00

2016-01-08T22:52:12.123456789 UTC

2016-01-08T14:52:12.123456789-08:00

2016-01-08T14:52:12.123456789 America/Los_Angeles

Month Day Year

2 or 4 digit year supported

1 or 2 digit month and day supported

supported month day year separator:

forward slash

If 12 hr clock, AM and PM are required and must be capitalized

Examples: Month Day Year 24Hr Clock

1/8/19 22:52

1/08/19 22:52:12

01/8/19 22:52:12.12345

01/08/2019 22:52:12.123456789

7/30/08 3:42

7/30/2008 03:42

Examples: Month Day Year 12Hr Clock

1/8/19 10:52 PM

1/08/19 10:52:12 PM

01/08/19 10:52:12.123 PM

01/08/2019 10:52:12.123456789 PM

7/30/08 3:42 AM

7/30/2008 03:42 AM

Seconds since Unix Epoch

fractional seconds since Unix Epoch

Example: 1452293532
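Converting seconds since the Unix epoch is straightforward. The sketch below also accepts comma as the fractional seconds separator (listed as supported for epoch keys) and keeps nanosecond precision with `decimal.Decimal`, because a Python `datetime` only resolves microseconds. `epoch_to_utc` is a hypothetical helper. The example value 1452293532 is 2016-01-08T22:52:12Z, the same instant as the ISO 8601 example above.

```python
from datetime import datetime, timedelta, timezone
from decimal import Decimal

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def epoch_to_utc(text):
    """Hypothetical helper: parse seconds since the Unix epoch.

    Accepts period or comma as the fractional separator, including a trailing
    separator with no digits. Returns (nanoseconds since epoch, UTC datetime);
    the datetime is truncated to microseconds, Python's finest resolution.
    """
    normalized = text.strip().replace(",", ".").rstrip(".")
    nanos = int(Decimal(normalized) * 1_000_000_000)
    return nanos, EPOCH + timedelta(microseconds=nanos // 1000)


print(epoch_to_utc("1452293532")[1])  # 2016-01-08 22:52:12+00:00
```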

Day First Keys

12 or 24 hour clock supported

For 12 hr clocks, AM and PM are required but do not have to be capitalized

Time First Keys

Example: 17:04 15/03/2020

24 hour clock is supported but not 12 hour clock

Supported separators

One of many possible examples to illustrate: 2019-04-22T10:12:42.32

date separator (2019-04-22):

forward slash, dash, period, period space (The day/month separator does not have to match the month/year separator.)

date followed by time separator (T):

T, space, period, period space

time followed by date separator:

space

fractional seconds separator (42.32):

period, comma, colon

hour minute seconds separator (10:12:42):

colon
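The separator rules for year-month-day keys can be expressed as a regular expression. This is a hypothetical sketch (`parse_ymd` is not part of Seeq) that handles only year-first dates with an optional time, does not handle time zone suffixes such as `Z` or `UTC`, and returns fractional seconds as nanoseconds.

```python
import re

_DATE_SEP = r"(?:/|-|\. |\.)"        # forward slash, dash, period space, period
_DATE_TIME_SEP = r"(?:T|\. |\.| )"   # T, period space, period, space
_TIME = r"(\d{1,2}):(\d{2})(?::(\d{2})(?:[.,:](\d{1,9}))?)?"  # fraction: . , or :
_PATTERN = re.compile(
    r"^(\d{4})" + _DATE_SEP + r"(\d{1,2})" + _DATE_SEP + r"(\d{1,2})"
    + r"(?:" + _DATE_TIME_SEP + r"(?:" + _TIME + r")?)?$"
)


def parse_ymd(text):
    """Hypothetical sketch: return (year, month, day, hour, minute, second,
    nanoseconds) for a year-first key, or None if it does not match."""
    match = _PATTERN.match(text.strip())
    if match is None:
        return None
    y, mo, d, hh, mm, ss, frac = match.groups()
    nanos = int(frac.ljust(9, "0")) if frac else 0  # "123" -> 123000000 ns
    return (int(y), int(mo), int(d), int(hh or 0), int(mm or 0), int(ss or 0), nanos)
```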

Examples: Date Separator Variations

2019/04/22

2019-04-22

2019.04.22

2019. 04. 22

2019/04.22

etc.

Examples: Variations on the Separator between Date followed by Time

2019-04-22

2019-04-22T

2019-04-22.

2019. 04. 22.

2019-04-22T10:12

2019-04-22 10:12

2019.04.22.10:12

2019. 04. 22. 10:12

2019.04.22 10:12

2019/04/22.10:12

etc.

Examples: Variations on the Separator between Time followed by Date

14:12 22/4/19

14:12:13.23 04/22/2019

etc.

Examples: Fractional Seconds Separator Variations

2019-04-22T10:12:15.123

2019-04-22T10:12:15,123

2019-04-22T10:12:15:123

Year Month Day (ISO 8601 and similar):

4 digit year required

24 hour clock required

Examples: Year Month Day 24Hr Clock

2019-1-8

2019.01.8

2019/01/08

2019. 01. 08

2019. 1. 8.

2019/1/8T

2019.1.08.

2019-01-8/.

2019-01-08 22:52

2019-01-08T22:52:12Z

2019-01-08.22:52:12.12

2019-01-08 22:52:12,1234

2019-01-08T22:52:12:123456789Z

2019/01/08T22:52:12.123456789+00:00

2019/01-08T22:52:12.123456789+00:00

2019-01-08 22:52:12.123456789 UTC

2019.01.08.14:52:12.123456789-08:00

2019. 1.08 14:52:12.123456789 America/Los_Angeles

2019. 1. 8. 14:52:12.123456789 America/Los_Angeles

(and many other possible combinations)

Month Day Year or Day Month Year

If using a 12 hr clock, AM and PM are required but do not have to be capitalized

Examples: Month Day Year or Day Month Year

1/8/19

1/08/2019

01/8/2019

1.8.19.

1-8-19T

1. 08. 2019.

01/8/19T

(and many other possible combinations)

Examples: Month Day Year or Day Month Year followed by 12Hr Clock

1/8/19 10:52 PM

1-08-19T10:52:12AM

01.8.19.10:52:12.12345 pm

01. 08. 2019. 10:52:12,12345678 am

01/8-19.10:52:12:12345678 Pm

(and many other possible combinations)

Examples: Month Day Year or Day Month Year followed by 24Hr Clock

1/8/19 22:52

1-08-19T22:52:12

01.8.19.22:52:12.12345

01. 08. 2019. 22:52:12,12345678

01/8-19.22:52:12:12345678

1/8/19 3:52

1-08-19T03:52:12

01.8.19.3:52:12.1

01. 08. 2019. 03:52:12,123

1-8. 2019T03:52:12:123456789

(and many other possible combinations)

Examples: 24Hr Clock followed by Month Day Year or Day Month Year

22:52 1/8/19

22:52:12 1-08-19

22:52:12.12345 01.8.19.

22:52:12,12345678 01. 08. 2019.

22:52:12:12345678 01/8-19.

3:52 1/8/19

03:52:12 1-08-19

3:52:12.1 01.8.19.

03:52:12,123 01. 08. 2019.

03:52:12:123456789 1-8. 2019

(and many other possible combinations)

Seconds since Unix Epoch

supported fractional seconds separators:

period, comma

Examples: Seconds Since Unix Epoch

1452293532

1452293532.

1452293532,

1452293532.12

1452293532,12345

1452293532.1234567

1452293532,123456789

Importing a CSV File

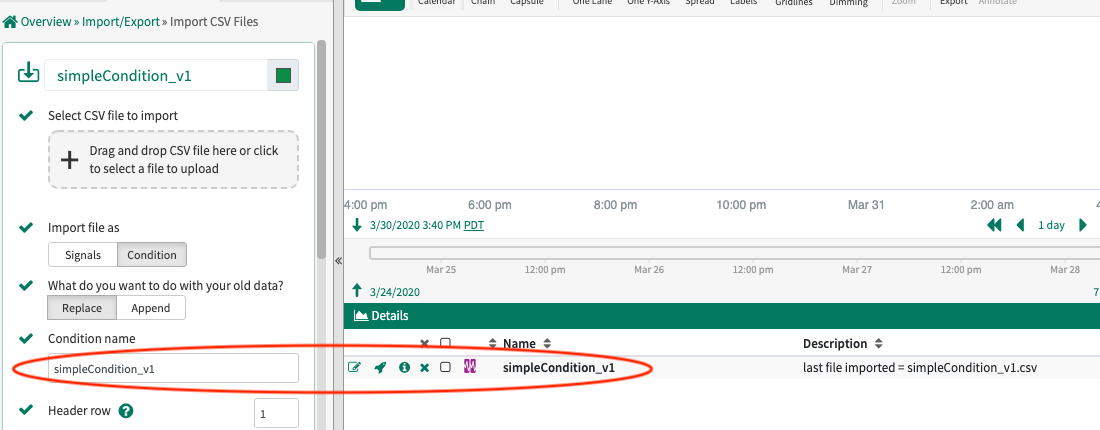

Replace and Append

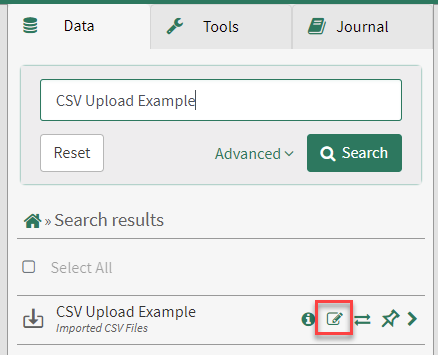

Another CSV file can be imported into an existing Datafile.

Start by searching for the Datafile on the Data tab and then selecting edit.

If the new CSV file matches the original import, the condition or signals created by the original import will be modified. If the CSV file does not match the original import, an additional condition or signals will be created.

Replace: When replace is chosen, all previously imported samples/capsules of the matching signal/condition are deleted regardless of the date range of the data in the CSV file.

Append: When append is chosen, the previously imported samples/capsules remain unless:

The new file has samples at the same key as previously existing samples. Only one sample per key is kept. The most recently imported sample for the particular key is the sample that is kept.

The new file has capsules with identical start time, end time, and properties as a previously imported capsule. Only one identical capsule is kept. The most recently imported capsule is the capsule that is kept.
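For a single signal, the difference between Replace and Append can be modeled with a dictionary from key (timestamp) to value. `import_samples` is a hypothetical sketch of the rules above, not Seeq's implementation; capsules follow the same pattern with start, end, and properties as the key.

```python
def import_samples(existing, incoming, mode):
    """Hypothetical model of Replace vs Append for one signal.

    existing and incoming map key (timestamp) -> value. Neither input is modified.
    """
    if mode == "replace":
        # Every previously imported sample is dropped, whatever its date range.
        return dict(incoming)
    if mode == "append":
        merged = dict(existing)
        merged.update(incoming)  # same key: the most recently imported sample wins
        return merged
    raise ValueError(f"unknown mode: {mode!r}")
```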

When appending or replacing data, the Datafile defaults to the import settings that were used previously, which saves time spent configuring the import. These settings are only defaults and can be changed as needed.

Modifying existing CSV conditions

When importing a file to an already existing Datafile, a condition created by the original import will be modified if the condition name specified in the import tool matches the name of the condition from the original import.

Modifying existing CSV signals

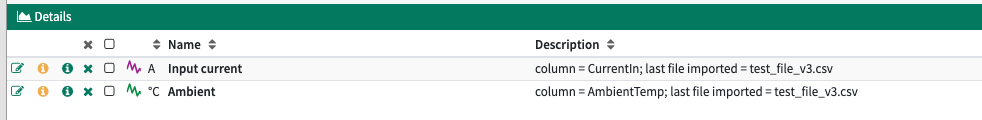

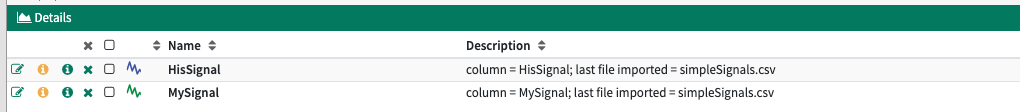

When importing a file to an already existing Datafile, signals created by the original import will be modified if the CSV file has column names that match the original import. The column name and file that each signal was imported from are logged in the item's description, so looking at the description can help plan signal modifications.

In most cases, however, the signal is named after the column, which makes it easy to plan modifications.

The situations where the signal name differs from the column name are:

A name prefix and suffix were used during import, in which case the signal name is "PrefixColumnNameSuffix".

The signal was imported using CSV V1 and then migrated in an upgrade to CSV V2. In this case, the signal name is very likely different from the column name.

Daylight Savings Tips

The following examples use the 2016 daylight saving transitions in the America/Los_Angeles region.

Spring forward:

When local standard time was about to reach

Sunday, March 13, 2016, 2:00:00 am clocks were turned forward 1 hour to

Sunday, March 13, 2016, 3:00:00 am local daylight time instead

which means that the 2:00 am hour doesn't exist in local time.

Fall back:

When local daylight time was about to reach

Sunday, November 6, 2016, 2:00:00 am clocks were turned backward 1 hour to

Sunday, November 6, 2016, 1:00:00 am local standard time instead

which means that there are two 1:00 am hours in local time.

The best practice is to log CSV data in UTC. UTC has no daylight saving transitions, so there are no missing or repeated hours to deal with.
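If the logging system can compute UTC, generating UTC keys is simple. This sketch (hypothetical helper `utc_rows`, using Python's `zoneinfo`) converts an unambiguous local start time to UTC once and then steps in UTC, which reproduces the timestamps in the listing that follows. Stepping in UTC is what avoids the skipped and repeated local hours.

```python
from datetime import datetime, timedelta, timezone
from zoneinfo import ZoneInfo


def utc_rows(local_start, count, tz_name="America/Los_Angeles", step=timedelta(minutes=15)):
    """Hypothetical helper: UTC keys for evenly spaced samples.

    local_start is a naive local time that must be unambiguous (for example,
    midnight). It is converted to UTC once; later keys are computed by
    stepping in UTC, which has no skipped or repeated hours.
    """
    start = local_start.replace(tzinfo=ZoneInfo(tz_name)).astimezone(timezone.utc)
    return [(start + i * step).strftime("%Y-%m-%dT%H:%M:%SZ") for i in range(count)]
```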

Los Angeles daylight savings transitions logged in UTC time

MyTime,MyValue

2016-03-13T08:00:00Z,7.0

2016-03-13T08:15:00Z,8.0

2016-03-13T08:30:00Z,5.0

2016-03-13T08:45:00Z,1.0

2016-03-13T09:00:00Z,3.0

2016-03-13T09:15:00Z,2.0

2016-03-13T09:30:00Z,6.0

2016-03-13T09:45:00Z,0.0

2016-03-13T10:00:00Z,4.0

2016-03-13T10:15:00Z,2.0

2016-03-13T10:30:00Z,4.0

2016-03-13T10:45:00Z,1.0

2016-03-13T11:00:00Z,7.0

2016-03-13T11:15:00Z,1.0

2016-03-13T11:30:00Z,0.0

2016-03-13T11:45:00Z,3.0

2016-11-06T07:00:00Z,7.0

2016-11-06T07:15:00Z,8.0

2016-11-06T07:30:00Z,5.0

2016-11-06T07:45:00Z,1.0

2016-11-06T08:00:00Z,3.0

2016-11-06T08:15:00Z,2.0

2016-11-06T08:30:00Z,6.0

2016-11-06T08:45:00Z,0.0

2016-11-06T09:00:00Z,5.0

2016-11-06T09:15:00Z,4.0

2016-11-06T09:30:00Z,8.0

2016-11-06T09:45:00Z,2.0

2016-11-06T10:00:00Z,9.0

2016-11-06T10:15:00Z,4.0

2016-11-06T10:30:00Z,8.0

2016-11-06T10:45:00Z,9.0

2016-11-06T11:00:00Z,4.0

2016-11-06T11:15:00Z,2.0

2016-11-06T11:30:00Z,4.0

2016-11-06T11:45:00Z,1.0

2016-11-06T12:00:00Z,7.0

2016-11-06T12:15:00Z,1.0

2016-11-06T12:30:00Z,0.0

2016-11-06T12:45:00Z,3.0

The next best practice is to log CSV data in local time with the UTC offset included in each timestamp.
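Python's `datetime.isoformat()` writes the offset automatically for time-zone-aware values. The hypothetical helper below converts UTC instants to America/Los_Angeles and produces keys in the same format as the listing that follows; the offset switches from -08:00 to -07:00 at 3:00 am in March and back from -07:00 to -08:00 at the second 1:00 am in November.

```python
from datetime import datetime, timedelta, timezone
from zoneinfo import ZoneInfo


def local_rows_with_offset(utc_start, count, tz_name="America/Los_Angeles", step=timedelta(minutes=15)):
    """Hypothetical helper: local-time keys that carry their UTC offset.

    Each UTC instant is converted to the local zone; isoformat() then appends
    the offset in effect at that instant.
    """
    tz = ZoneInfo(tz_name)
    return [(utc_start + i * step).astimezone(tz).isoformat(timespec="seconds") for i in range(count)]
```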

Los Angeles daylight savings transitions logged in local time with offset information

MyTime,MyValue

2016-03-13T00:00:00-08:00,7.0

2016-03-13T00:15:00-08:00,8.0

2016-03-13T00:30:00-08:00,5.0

2016-03-13T00:45:00-08:00,1.0

2016-03-13T01:00:00-08:00,3.0 (1:00 am hour)

2016-03-13T01:15:00-08:00,2.0

2016-03-13T01:30:00-08:00,6.0

2016-03-13T01:45:00-08:00,0.0

2016-03-13T03:00:00-07:00,4.0 (3:00 am hour. Note the missing 2:00 am hour. The change in offset prevents confusion.)

2016-03-13T03:15:00-07:00,2.0

2016-03-13T03:30:00-07:00,4.0

2016-03-13T03:45:00-07:00,1.0

2016-03-13T04:00:00-07:00,7.0

2016-03-13T04:15:00-07:00,1.0

2016-03-13T04:30:00-07:00,0.0

2016-03-13T04:45:00-07:00,3.0

2016-11-06T00:00:00-07:00,7.0

2016-11-06T00:15:00-07:00,8.0

2016-11-06T00:30:00-07:00,5.0

2016-11-06T00:45:00-07:00,1.0

2016-11-06T01:00:00-07:00,3.0 (1st 1:00 am hour)

2016-11-06T01:15:00-07:00,2.0

2016-11-06T01:30:00-07:00,6.0

2016-11-06T01:45:00-07:00,0.0

2016-11-06T01:00:00-08:00,5.0 (2nd 1:00 am hour. The change in offset prevents confusion.)

2016-11-06T01:15:00-08:00,4.0

2016-11-06T01:30:00-08:00,8.0

2016-11-06T01:45:00-08:00,2.0

2016-11-06T02:00:00-08:00,9.0

2016-11-06T02:15:00-08:00,4.0

2016-11-06T02:30:00-08:00,8.0

2016-11-06T02:45:00-08:00,9.0

2016-11-06T03:00:00-08:00,4.0

2016-11-06T03:15:00-08:00,2.0

2016-11-06T03:30:00-08:00,4.0

2016-11-06T03:45:00-08:00,1.0

2016-11-06T04:00:00-08:00,7.0

2016-11-06T04:15:00-08:00,1.0

2016-11-06T04:30:00-08:00,0.0

2016-11-06T04:45:00-08:00,3.0

However, it is common for data to be logged in local time but without any offset information in the timestamp.

Los Angeles daylight savings transitions logged in local time without offset information

MyTime,MyValue

2016-03-13T00:00,7.0

2016-03-13T00:15,8.0

2016-03-13T00:30,5.0

2016-03-13T00:45,1.0

2016-03-13T01:00,3.0 (1:00 am hour)

2016-03-13T01:15,2.0

2016-03-13T01:30,6.0

2016-03-13T01:45,0.0

2016-03-13T03:00,4.0 (3:00 am hour. Note the missing 2:00 am hour.)

2016-03-13T03:15,2.0

2016-03-13T03:30,4.0

2016-03-13T03:45,1.0

2016-03-13T04:00,7.0

2016-03-13T04:15,1.0

2016-03-13T04:30,0.0

2016-03-13T04:45,3.0

2016-11-06T00:00,7.0

2016-11-06T00:15,8.0

2016-11-06T00:30,5.0

2016-11-06T00:45,1.0

2016-11-06T01:00,3.0 (1st 1:00 am hour)

2016-11-06T01:15,2.0

2016-11-06T01:30,6.0

2016-11-06T01:45,0.0

2016-11-06T01:00,5.0 (2nd 1:00 am hour. Time appears to have gone backward. This causes confusion. Is this 2nd hour the daylight shift? Or were two samples taken at the same time?)

2016-11-06T01:15,4.0

2016-11-06T01:30,8.0

2016-11-06T01:45,2.0

2016-11-06T02:00,9.0

2016-11-06T02:15,4.0

2016-11-06T02:30,8.0

2016-11-06T02:45,9.0

2016-11-06T03:00,4.0

2016-11-06T03:15,2.0

2016-11-06T03:30,4.0

2016-11-06T03:45,1.0

2016-11-06T04:00,7.0

2016-11-06T04:15,1.0

2016-11-06T04:30,0.0

2016-11-06T04:45,3.0

When the data is logged in local time without offset information, the missing spring hour doesn't cause trouble. However, the intention of the repeated fall hour is ambiguous. Is it the daylight saving shift, or were multiple measurements taken at the same timestamp?

Seeq attempts to infer the intention. When importing a CSV file, a time zone is specified, and Seeq checks that time zone for its daylight saving fall back transition. If there is a repeated hour that aligns with the fall back transition, Seeq assumes that the 2nd 1:00 am hour is a different hour than the 1st 1:00 am hour.
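This inference can be approximated with Python's `fold` attribute (PEP 495), which selects the first (`fold=0`) or second (`fold=1`) occurrence of a repeated local time. `localize_fall_back` is a hypothetical sketch, not Seeq's algorithm: it switches to the second occurrence only when time goes backward inside the repeated hour, and switches back once the timestamps leave that hour.

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo


def localize_fall_back(naive_times, tz_name):
    """Hypothetical sketch: convert naive local times (in file order) to UTC.

    Inside the repeated fall back hour, the first occurrence (fold=0) is used
    until time goes backward; from then on the second occurrence (fold=1) is
    used until the timestamps leave the repeated hour.
    """
    tz = ZoneInfo(tz_name)
    result, previous, second_pass = [], None, False
    for naive in naive_times:
        first = naive.replace(tzinfo=tz, fold=0)
        second = naive.replace(tzinfo=tz, fold=1)
        # In a repeated hour the first occurrence has the larger UTC offset
        # (daylight time); in a spring forward gap the order is reversed.
        repeated = first.utcoffset() > second.utcoffset()
        if not repeated:
            second_pass = False
        elif previous is not None and naive < previous:
            second_pass = True  # time went backward: this is the 2nd 1:00 am hour
        chosen = second if (repeated and second_pass) else first
        result.append(chosen.astimezone(timezone.utc))
        previous = naive
    return result
```

Applied to the ambiguous example that follows (01:00, 01:10, 01:40, 01:55), time never goes backward, so this sketch also places every sample in the first 1:00 am hour, which is Situation 2.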

There are a couple of situations where Seeq is not able to infer the intention.

Situation 1: The first 1:00 am hour is in File 1 and the second 1:00 am hour is in File 2. File 2 is imported later and appended to the Datafile. In this case, the 1:00 am samples from File 1 are overwritten by the 1:00 am samples from File 2.

Situation 2: Time does not appear to go backward. In this case, all 1:00 am samples will be recorded in the same hour.

Los Angeles daylight savings fall back transition is ambiguous

2016-11-06T00:00,7.0

2016-11-06T00:10,8.0

2016-11-06T00:40,1.0

2016-11-06T01:00,3.0 (1st 1:00 am hour intended)

2016-11-06T01:10,2.0 (1st 1:00 am hour intended)

2016-11-06T01:40,5.0 (2nd 1:00 am hour intended)

2016-11-06T01:55,4.0 (2nd 1:00 am hour intended)

2016-11-06T02:00,9.0

2016-11-06T02:15,4.0

2016-11-06T02:30,8.0

2016-11-06T02:45,9.0

LenientDaylightSavings

The import has a LenientDaylightSavings option which impacts the handling of data logged in the vicinity of the spring forward daylight savings transition.

In the example above, the 2:00 AM hour doesn't exist during spring forward, so data logged properly in local time should show the 2:00 AM hour as missing, as the examples above do. However, we still see customer files with data in this non-existent hour. (How did it get there?) By default, Seeq fails the import if it detects this problem. This gives the user a chance to make sure that the data is actually consistent with the time zone provided.

If the user would like to proceed despite having data in a non-existent hour, the lenientDaylightSavings option can be enabled, in which case data in the non-existent period is interpreted as being in the period directly after it. For example, in the Los Angeles region, 2:00 AM data would be interpreted as 3:00 AM data. Examine the following datasets to understand the implications.
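The lenient behavior can be sketched with `zoneinfo`: a non-existent local time with `fold=0` is converted using the offset in effect before the transition, so 2:15 am becomes 10:15Z, which is 3:15 am daylight time. The hypothetical `import_spring_forward` below rejects such rows unless `lenient=True` and keeps one sample per key, with later rows overwriting earlier ones. It reproduces both example datasets that follow, but it is an illustration, not Seeq's implementation.

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo


def import_spring_forward(rows, tz_name, lenient=False):
    """Hypothetical sketch of LenientDaylightSavings for naive local keys.

    rows: (ISO 8601 text without offset, value) pairs in file order.
    Returns (local key, value) pairs sorted by time.
    """
    tz = ZoneInfo(tz_name)
    samples = {}
    for text, value in rows:
        naive = datetime.fromisoformat(text)
        # fold=0 (the default): a time in the gap uses the pre-transition offset,
        # so 02:15 standard time is the same instant as 03:15 daylight time.
        instant = naive.replace(tzinfo=tz).astimezone(timezone.utc)
        round_trip = instant.astimezone(tz).replace(tzinfo=None)
        if round_trip != naive and not lenient:
            raise ValueError(f"{text} does not exist in {tz_name}")
        samples[instant] = value  # one sample per key: later rows overwrite earlier ones
    return [
        (key.astimezone(tz).replace(tzinfo=None).isoformat(timespec="minutes"), value)
        for key, value in sorted(samples.items())
    ]
```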

Example 1: Evenly spaced samples.

Because:

Samples are evenly spaced.

Seeq only keeps one sample per key. It keeps the last sample uploaded.

2:00 data is shifted to 3:00.

The 3:00 hour "overwrites" the 2:00 am hour. The 2:00 am hour is now missing.

Example 1: Evenly spaced samples in Los Angeles daylight savings spring forward

ORIGINAL FILE

MyTime,MyValue

2016-03-13T01:00,1.0 (1:00 am hour)

2016-03-13T01:15,2.0

2016-03-13T01:30,3.0

2016-03-13T01:45,4.0

2016-03-13T02:00,5.0 (2:00 am hour. This hour doesn't exist!)

2016-03-13T02:15,6.0

2016-03-13T02:30,7.0

2016-03-13T02:45,8.0

2016-03-13T03:00,9.0 (3:00 am hour)

2016-03-13T03:15,10.0

2016-03-13T03:30,11.0

2016-03-13T03:45,12.0

2016-03-13T04:00,13.0

DATA IN SEEQ

MyTime,MyValue

2016-03-13T01:00,1.0 (1:00 am hour)

2016-03-13T01:15,2.0

2016-03-13T01:30,3.0

2016-03-13T01:45,4.0

2016-03-13T03:00,9.0 (3:00 am hour)

2016-03-13T03:15,10.0

2016-03-13T03:30,11.0

2016-03-13T03:45,12.0

2016-03-13T04:00,13.0

Example 2: Unevenly spaced samples.

Because:

Samples are unevenly spaced.

2:00 data is shifted to 3:00.

The 2:00 and 3:00 data is now interspersed.

Example 2: Unevenly spaced samples in Los Angeles daylight savings spring forward

ORIGINAL FILE

MyTime,MyValue

2016-03-13T01:00,1.0 (1:00 am hour)

2016-03-13T01:15,2.0

2016-03-13T01:30,3.0

2016-03-13T01:45,4.0

2016-03-13T02:05,5.0 (2:00 am hour. This hour doesn't exist!)

2016-03-13T02:20,6.0

2016-03-13T02:40,7.0

2016-03-13T02:50,8.0

2016-03-13T03:00,9.0 (3:00 am hour)

2016-03-13T03:15,10.0

2016-03-13T03:30,11.0

2016-03-13T03:45,12.0

2016-03-13T04:00,13.0

DATA IN SEEQ

MyTime,MyValue

2016-03-13T01:00,1.0 (1:00 am hour)

2016-03-13T01:15,2.0

2016-03-13T01:30,3.0

2016-03-13T01:45,4.0

2016-03-13T03:00,9.0 (3:00 am hour)

2016-03-13T03:05,5.0

2016-03-13T03:15,10.0

2016-03-13T03:20,6.0

2016-03-13T03:30,11.0

2016-03-13T03:40,7.0

2016-03-13T03:45,12.0

2016-03-13T03:50,8.0

2016-03-13T04:00,13.0

Configuring Server Defaults

Import preferences that tend to vary by region can be configured for a Seeq server. Those settings can be found in the Configuration section of the Administration panel. For example, US servers may prefer the factory defaults of DayFirstDefault = false and DelimiterDefault = comma. German servers may prefer DayFirstDefault = true and DelimiterDefault = semicolon. Seeq does not need to be restarted for the changes to take effect. However, the browser may need to be reloaded to see the change in defaults.

Security considerations

Seeq will import the contents of the CSV files but keep no permanent copies of the file. If the file is not readable as a CSV file, it is rejected (thus protecting against uploading binary/executable files).

By default, data from CSV files is scoped to the Workbook and inherits the permissions of the Workbook.